Parallel Computing Basics

Parallel computing means to employ several processing units simultaneously.

Basically all kinds of parallel processing have inefficiencies (parallel overhead). This page explains how to keep overheads small for

Parallelized programs

Today much software is parallelized, i.e. it is programmed to run on several processors. Parallelization can be implemented for

- a single multi-processor computer with threads or

- multiple computers by using several processes.

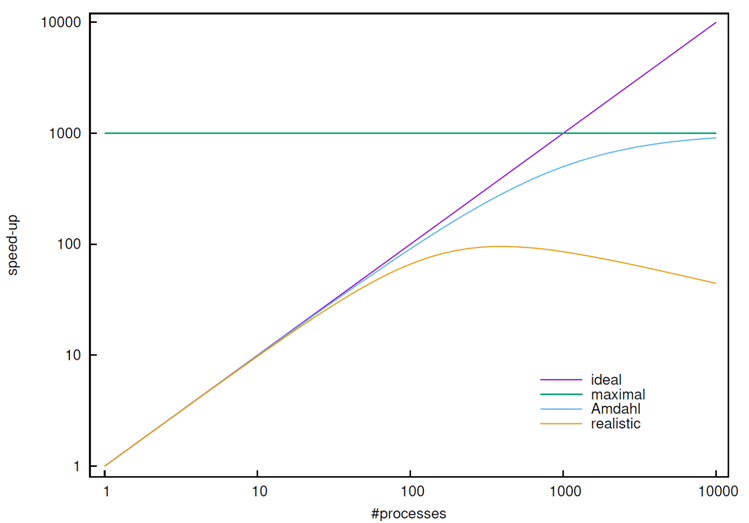

All parallel programs have parallelization overheads. As a consequence the speedup achieved when running the program on N processors is usually smaller than N. It is important to know that for (very) large numbers of processors the parallel program will run slower than on a single processor! Even if parallelization overhead is neglected speedup is limited according to Amdahl’s law. The consequence is that

for all parallel computations an optimal number of processors

to run on should be determined!

Scaling of parallel programs and Amdahl’s law

An optimum can be found by studying scaling, i.e. the speedup as a function of the number of processors used. The speedup that can be achieved depends on the problem (and to some degree on the parallel implementation). For illustration the plot below shows realistic scaling behaviour (yello curve) together with scaling predicted by Amdahl’s law (blue curve, for a program that is parallelized to 99.9%). From the plot one can see that in this case resources would be wasted for processor counts larger than ≈ 100.

Embarrassingly parallel problems

A trivially or embarrassingly parallel problem consists of computational tasks that can be executed independently of each other. Such tasks can trivially be executed simultaneously. A typical case is where a single program processes many data sets.

Processing a few tasks in parallel

In a batch script simultaneous execution of such a program can be programmed like this:

/path/to/executable-program input1 output1&/path/to/executable-program input2 output2&...waitall

Explanations:

- The ampersand

& - The

waitall

Processing many tasks in parallel

For processing many tasks in parallel we recommend our tool jobber. jobber can process a list of tasks specified as one-liners in a file. If a task is finished jobber automatically starts the next unworked task from the list until a given number of tasks is completed or a time limit is reached. In a follow-up job it can continue processing the task list at the point where it stopped.

A classic tool for processing many tasks in parallel is GNU parallel. We find jobber more intuitive and flexible.

Load balancing

If execution times of tasks vary considerably load balancing can become important. The case to be avoided is a single task (or a few tasks) running much longer that the other tasks, because then a considerable amount of processors might be unused for a relatively long time. If a few tasks take shorter than the rest the amount of unused compute capacity is likely to be negligible. If it is possible to estimate the execution time of individual tasks the problem can be reduced by sorting the tasks longest task first.